February 5, 2024

Pruning — The Holy Grail of Snowflake Optimization

A detailed guide for reducing Snowflake costs with pruning.

Sahil Singla

Co-founder of Baselit

Snowflake is a powerful platform for storing and managing vast amounts of data, but makes it very easy to run up costs if one is not employing optimal practices. In this blog, I’ll discuss pruning, the holy grail of Snowflake optimization. We’ll cover the following topics:

What is pruning and why is it important?

The concept of clustering and its relation with pruning

Natural clustering in Snowflake

Programmatically identifying the top 10 expensive queries don’t use pruning

Optimization

What is pruning, and why is it important?

Imagine you’re in a huge library with thousands of books. You’re looking for books on gardening, but this library doesn’t follow any specific order — gardening books are mixed with cookbooks, novels, and science journals. You’d have to look through each book to find what you need, which is time-consuming and exhausting.

Now, imagine if you had an awesome librarian who sorted these books into sections and labeled them. The gardening section would be in one area, all neatly organized. In this case, you’d just skip the entire cookbook and novel sections completely. Whoa, that’s a time saver!

This is pruning — saving time by skipping irrelevant sections to find out the information we seek. Snowflake, in this regard, works similar to a library — with tables instead of books. And always remember, in the Snowflake world, time = money.

Clustering and its relation with pruning

Snowflake acts like a librarian, helping to keep data organized, though it requires guidance to achieve near-human levels of intelligence. This process is known as clustering, where we define keys based on our understanding of the data and its usage. For example, in our librarian analogy, the genre of a book would be the clustering key, allowing us to quickly find what we need by skipping irrelevant sections.

Now, let’s apply this concept to a real-world scenario. In many companies, a major source of data is event data. We’ll use this as our case study to understand clustering better.

Note: While event data typically comes in JSON format, we’ll consider an example of relational data for simplicity, and to stay focused on our current topic.

Let’s consider a simple table to hold our event data.

Natural clustering in Snowflake

Our event data arrives daily from a source. Snowflake intelligently recognizes this pattern and organizes the data by date automatically, a process we refer to as natural clustering. This means Snowflake can efficiently cluster data by date without any explicit instructions from us. We’ll explore another feature, Automatic Clustering, in more detail in a future blog post.

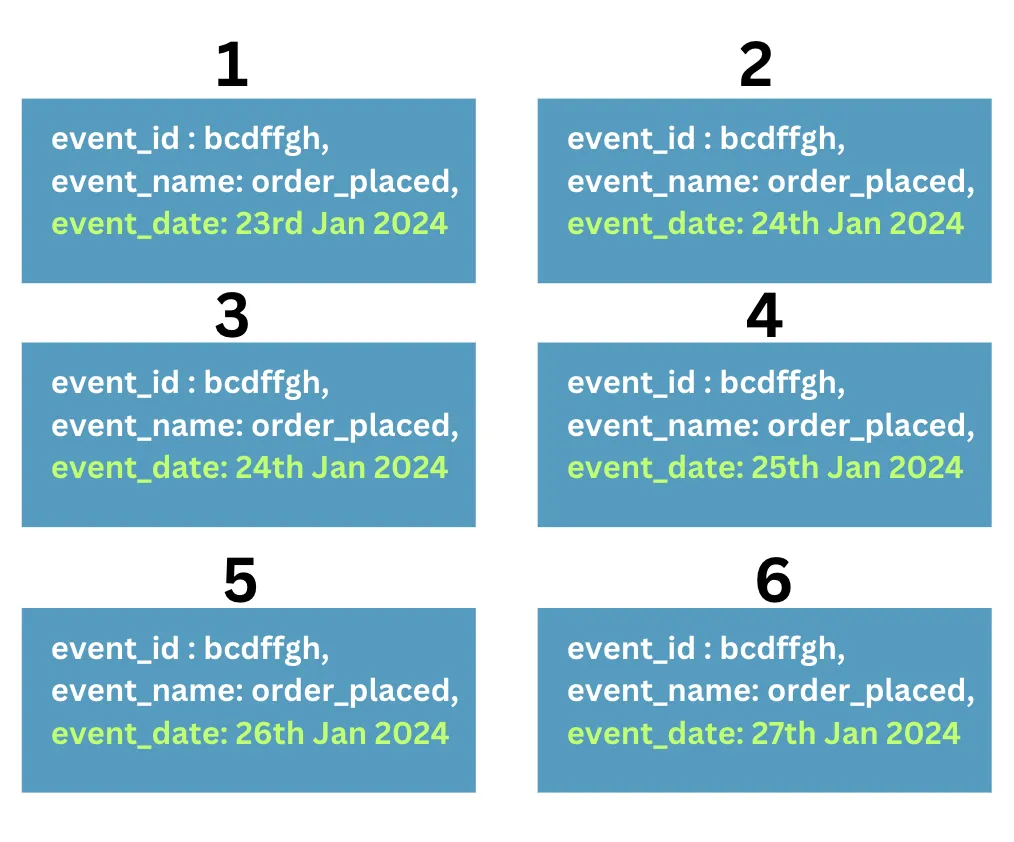

The diagram below shows how Snowflake stores event data in segments called micro-partitions. The key point is how Snowflake uses the dates of events to naturally cluster the data by event_date. When you request data for just the last three days, Snowflake cleverly filters out irrelevant micro-partitions, providing only those with event dates within this timeframe.

Finding data quickly relies on two key factors: first, organizing the data effectively, and second, understanding your requirements clearly. Just as asking a librarian for an ‘interesting’ book isn’t specific enough to save time, similarly, when retrieving data with a SQL query, it makes sense to apply a filter on the event_date column.

Identifying expensive queries that don’t use pruning

(Note: This section requires admin access for Snowflake, and will be useful if you’re looking to optimize Snowflake spend for your entire organization.)

We’ve understood pruning and clustering, but let’s delve deeper to pinpoint expensive queries that don’t utilize pruning. Our focus will be on the top 500 expensive queries over the past 14 days. We will then analyze the query plans of these queries, to identify if there are any opportunities for taking advantage of pruning.

But analyzing the query plans of 500 queries individually through the UI would be cumbersome. So we will adopt a programmatic method to identify the most important queries for review. Examining this smaller number of query plans in Snowflake’s UI would be more manageable.

Note: We lack programmatic access to queries executed more than 14 days ago.

Our strategy will be as follows:

Identify the most expensive queries by calculating their total execution time, considering how frequently they run.

For each identified query, we’ll programmatically retrieve their query plan using

GET_QUERY_OPERATOR_STATS(<query_id>).

We’ll proceed until we’ve identified the top 10 expensive queries that aren’t pruning or have reviewed our top 500 queries.

Here’s the SQL code for Step 1:

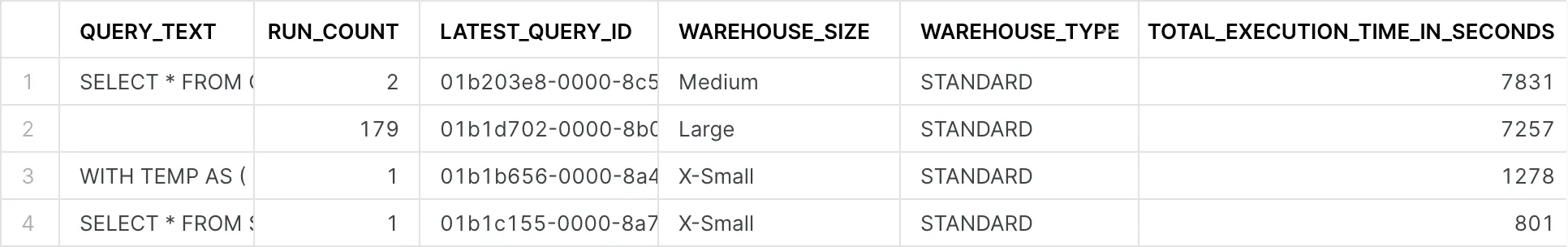

Here are the sample results:

This step has helped us find out expensive queries by calculating their total execution time, while also considering the pricing of differently-sized warehouses.

Step 2: Next, we’ll identify the expensive queries that fail to effectively prune. Since there’s no direct method to access pruning details, we’ll resort to a Python script, to get a clearer structural understanding.

Our goal is to pinpoint the specific TABLE SCAN operation within a query that accounts for over 90% of its total execution time, thus identifying the culprit table. We’ll focus on analyzing the top 500 most expensive queries by cost to gather our insights. If none of these queries are returning rows, it suggests that our organization’s queries are generally well-optimized.

Why the 90% filter? To align with our goal of reducing costs, we’re targeting queries that offer the best opportunities for optimization. The first filter checks if a TABLE SCAN accounts for more than 90% of a query’s total time. The second filter examines whether 9 out of 10 partitions for the table in question are being scanned. If a query doesn’t meet these criteria, it’s not considered worth optimizing. This approach increases our chances of identifying queries where optimizations can have a significant impact.

Below is a brief code snippet for Step 2. The full, detailed code is available at the end of the article, ready to use in a Snowflake Python worksheet.

Show me the money

We now have a list of queries that are ripe for optimization. Unfortunately, there is no way to automate that actual optimization, so we’ll have to get our hands dirty. We have the relevant query IDs and the table names for each query. To extract the query texts, run the following SQL.

Let’s also run this using the table name that we have found.

This SQL query would give us the clustering information of a particular table. If it returns an error, it implies that no explicit clustering key has been set. Note that there could still be a natural clustering key, as discussed in an earlier section.Having identifies the table names, we’ve identified which part of the query needs our attention. By examining the clustering information, we now understand which fields can be leveraged for pruning.

Now, the next step is to refine the query to fetch only the relevant data. If the clustering key is present, use that in the WHERE clause to filter out the data. If a clustering key isn’t available, we can rely on our knowledge of data insertion to use the natural clustering key for filtering. In practice, queries involving JOINS and complex predicates are often major culprits. Despite Snowflake’s innate data pruning capabilities, it cannot intuitively understand every aspect of the data.

Note: If you have followed to this point, and are interested in understanding the patterns of your data and the keys you could potentially use to cluster, check out SYSTEM$CLUSTERING_INFORMATION.